Introduction

As we embark on a transition from a traditional VM setup hosted at TransIP to the scalable cloud infrastructure of Microsoft Azure, our journey is as much about discovery as it is about performance. Guided by the expertise of Pionative, we're not just moving our environment; we are attempting to reinvent it in Azure, carefully testing and analyzing every step we take. Our goal? To achieve optimal performance in the new architecture and to uncover any bottlenecks or hidden issues lurking in it as early as possible.

Azure regions and Zones

Azure's infrastructure spans the globe. It is organized into regions, which host services close to their users, and regions are further divided into availability zones for high availability. Regions are specific geographical areas hosting Azure services, while zones are physically separate locations within a region, each with independent power, cooling, and networking so that a failure in one zone does not take down the others.

The Western Europe Region

The Western Europe region in Azure is structured into three distinct availability zones. These zones provide an additional layer of redundancy and fault tolerance by physically separating data centers within the region. This setup is designed so that even if an entire zone fails, services can continue to operate in the other zones, offering robust disaster recovery for businesses operating in Europe. Exactly where Zones 1, 2, and 3 are located geographically is unknown to me.

Performance anomaly in the Western Europe Region

Our testing led us to an anomaly that has become the focal point of this post: a distinct latency issue in Zone 3 of the Western Europe region. The discovery wasn't immediate; it emerged from days of performance testing and close observation of patterns that deviated from our expectations. Part of the reason it took so long is that we had only just started to learn the ways of Azure and how its performance differs from our current setup.

We started by ruling out our own pods, code, and node pool performance settings, as well as database performance settings and similar factors.

Methodology and findings

To verify this result, our final approach was thorough: we deployed two node pools in each of Azure's three zones in the Western Europe region, to rule out any node-specific variance. All pools had exactly the same performance configuration.

Through extensive testing, including a battery of database calls and runs of the cron jobs that are integral to our application, we noticed a consistent and surprising pattern, irrespective of each zone's reported capacity or congestion levels.

Interactions with the database were significantly slower whenever either the database or the connecting pods (but not both) were situated in Zone 3.
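For anyone who wants to repeat this kind of comparison, the core of it is simply timing a fixed number of queries issued one after another from each node pool. The Python sketch below is an illustration, not our actual test code: `run_query` is a hypothetical callable you would wire to a real MySQL client, and the pool names and simulated delays in the usage part are made up.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_sequential_queries(run_query: Callable[[], None], n: int) -> float:
    """Run `n` queries strictly one after another and return the total wall time in seconds.

    `run_query` is a hypothetical callable that performs one database round trip.
    Each call finishes before the next starts, mimicking a single-threaded cron job.
    """
    start = time.perf_counter()
    for _ in range(n):
        run_query()
    return time.perf_counter() - start


def compare_pools(pools: Dict[str, Callable[[], None]], n: int, repeats: int = 3) -> Dict[str, float]:
    """Return the median total time (seconds) per pool over several repeated runs."""
    results: Dict[str, float] = {}
    for name, run_query in pools.items():
        samples: List[float] = [time_sequential_queries(run_query, n) for _ in range(repeats)]
        results[name] = statistics.median(samples)
    return results


if __name__ == "__main__":
    # Stand-in "queries" that only sleep; the delays are hypothetical, not measured values.
    fake_pools = {
        "zone-1-pool-1": lambda: time.sleep(0.001),
        "zone-3-pool-1": lambda: time.sleep(0.003),
    }
    for pool, seconds in compare_pools(fake_pools, n=50).items():
        print(f"{pool}: {seconds:.2f} s")
```

Taking the median of several runs keeps a single noisy run from skewing the comparison between pools.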

Performance results

Below are the results of running a cron job that takes around 32 seconds locally in Docker against our current setup. That environment isn't directly comparable to Azure, but it gave us a performance baseline and a goal. The script fires around 32,000 queries to build a feed of all the products on our website. Yes, that's a lot, and we know the script can be optimized, but that is beside the point here: we wanted to uncover the performance issue.

MySQL Database in Zone 2

Zone 1 – pool 1: 33 seconds

Zone 1 – pool 2: 32 seconds

Zone 2 – pool 1: 32 seconds

Zone 2 – pool 2: 32 seconds

Zone 3 – pool 1: 115 seconds

Zone 3 – pool 2: 117 seconds

Then, to put the icing on the cake, we moved the database to Zone 3. The results below are exactly what we expected, and they concluded our search.

MySQL Database in Zone 3

Zone 1: 120 seconds

Zone 2: 122 seconds

Zone 3: 33 seconds

We saw the same pattern every time, even when we ran fewer but larger database calls.

MySQL Database in Zone 2

16 DB queries, single-threaded:

Zone 1: Total time for queries from log: 9.57 ms

Zone 2: Total time for queries from log: 9 ms

Zone 3: Total time for queries from log: 49.96 ms

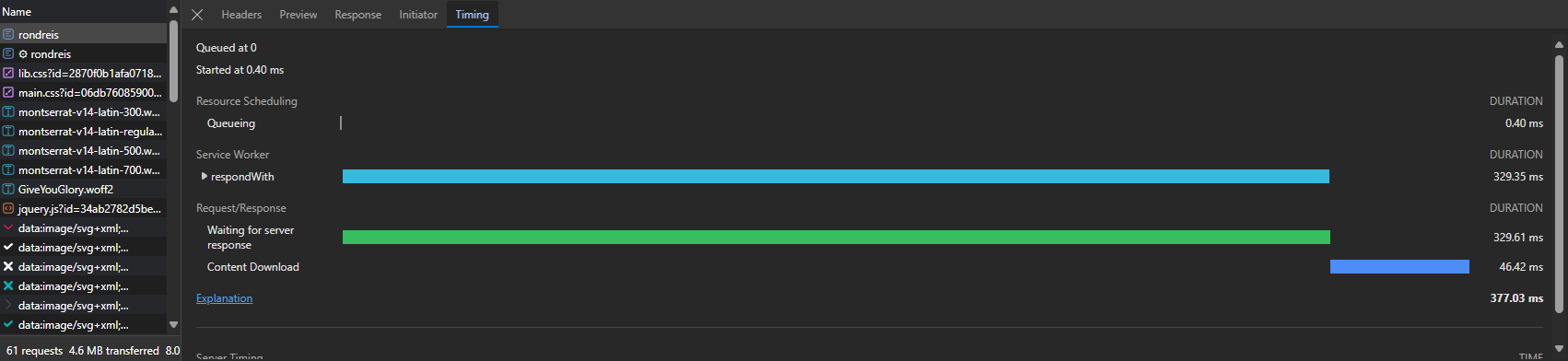
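A quick back-of-the-envelope calculation shows why a single-threaded job magnifies the gap. If we assume the extra time is spread evenly over all queries (an assumption; we did not measure individual round trips), both measurements with the database in Zone 2 point to roughly the same added latency per query, about 2.5 ms. The helper name below is our own, for illustration only.

```python
def extra_ms_per_query(slow_total: float, fast_total: float, queries: int, unit_to_ms: float) -> float:
    """Average extra latency per query in milliseconds, assuming the slowdown
    is spread evenly across `queries` sequential round trips."""
    return (slow_total - fast_total) * unit_to_ms / queries


# Cron job: ~32,000 queries, 115 s (pool in Zone 3) vs 32 s (pool in Zone 2).
cron = extra_ms_per_query(115, 32, 32_000, unit_to_ms=1000)
# Small test: 16 queries, 49.96 ms (Zone 3) vs 9 ms (Zone 2).
small = extra_ms_per_query(49.96, 9, 16, unit_to_ms=1)

print(f"cron job:   {cron:.2f} ms extra per query")   # cron job:   2.59 ms extra per query
print(f"16 queries: {small:.2f} ms extra per query")  # 16 queries: 2.56 ms extra per query
```

For comparison, the 32-second baseline works out to about 1 ms per query in total, so a penalty of roughly 2.5 ms per round trip more than triples the runtime of a job that waits for each query in turn.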

Website performance in Azure

With the database in Zone 2 and the API pod in Zone 1, the first page load of our website is at least 400 milliseconds faster than with the API pod in Zone 3 and the database still in Zone 2.

There is a margin of error here, but we conclude from these results that if you want to spread your API geographically across two zones, you cannot get consistent performance when one of those zones is Zone 3. Zones 1 and 2 are the way to go.

To be fair, this anomaly only occurs in pods that hold a database connection. Running our website (which has no database connection) in Zone 3 had almost no measurable impact when the API pod ran in Zone 1 and the database resided in Zone 2.

Diving deeper into Zone 3's latency issues

This performance discrepancy in Zone 3 was astonishing to us. According to Microsoft's best practices, the physical proximity of application servers to their respective databases within the same region should minimize latency. Our findings, however, painted a different picture, suggesting that within-region zone selection could profoundly impact application performance.

Our investigation was comprehensive, eliminating potential variables such as our own codebase, PHP environment configuration, MySQL performance, and much more. The consistent latency, which hit PHP's single-threaded cron jobs hardest, finally pointed us towards a systemic issue somewhere in Zone 3's network architecture or deployment.
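Why would single-threaded jobs be hit hardest? A simplified model helps: a job that waits for each query before sending the next pays any extra network latency once per round trip, while work spread over several connections overlaps those waits. The sketch below is only an illustration under stated assumptions: a fixed extra delay per round trip (2.5 ms, rounded down from our 16-query test, where (49.96 - 9) ms / 16 is about 2.6 ms) and a perfectly even split of queries across workers. The function name and worker counts are hypothetical.

```python
import math


def added_wall_time_s(round_trips: int, extra_rtt_ms: float, workers: int = 1) -> float:
    """Simplified model of the extra wall-clock time (seconds) caused by a fixed
    latency penalty per database round trip.

    Assumes round trips are split evenly over `workers` connections, each of which
    waits for one query before sending the next; the connections run in parallel.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    sequential_trips_per_worker = math.ceil(round_trips / workers)
    return sequential_trips_per_worker * extra_rtt_ms / 1000


# Hypothetical comparison for a ~32,000-query job with an assumed 2.5 ms penalty.
for workers in (1, 4, 16):
    print(f"{workers:>2} worker(s): +{added_wall_time_s(32_000, 2.5, workers):.1f} s")
# prints:
#  1 worker(s): +80.0 s
#  4 worker(s): +20.0 s
# 16 worker(s): +5.0 s
```

With a single worker, the model adds about 80 seconds, which is in the same ballpark as the roughly 83-second gap we measured for the cron job (115 s versus 32 s).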

Collaboration and learning

Our journey, backed by our collaboration with Pionative, underscores the importance of partnership in navigating the cloud's complexity. As we dig deeper into this latency anomaly, our shared efforts aim not only to optimize our deployment but also to contribute our findings back to the broader Azure community.

This latency impact is too large to ignore in our production environment.

Implications and next steps

This anomaly in Zone 3 opens up discussions on optimal zone selection and deployment strategies within Azure's Western Europe region. Is this the reason Zone 3 is the least populated zone? There seems to be very little about this online, so I wrote this post: pinpointing this performance difference literally cost us days.

This will impact our production environment. We are going to run our database in Zone 1 or 2, and the API must reside in one of those zones as well. Zone 3 can still be used for pods that talk to the API rather than directly to the database.
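This placement rule is easy to check automatically before a deployment. The sketch below is purely illustrative: the `Workload` type, the `needs_db` flag, the workload names, and the idea of a pre-deploy check are hypothetical and not part of any Azure or Kubernetes API. It simply encodes our rule that the database and every workload that connects to it stay in Zone 1 or 2.

```python
from dataclasses import dataclass
from typing import List

# Zones where database traffic performed well in our tests (Western Europe).
DB_SAFE_ZONES = {"1", "2"}


@dataclass
class Workload:
    name: str       # hypothetical workload name
    zone: str       # availability zone number, e.g. "1"
    needs_db: bool  # True if the workload opens a database connection


def placement_problems(database_zone: str, workloads: List[Workload]) -> List[str]:
    """Return human-readable violations of the zone placement rule."""
    problems: List[str] = []
    if database_zone not in DB_SAFE_ZONES:
        problems.append(f"database is in zone {database_zone}, expected one of {sorted(DB_SAFE_ZONES)}")
    for w in workloads:
        if w.needs_db and w.zone not in DB_SAFE_ZONES:
            problems.append(f"{w.name} talks to the database but runs in zone {w.zone}")
    return problems


plan = [
    Workload("api", zone="1", needs_db=True),
    Workload("website", zone="3", needs_db=False),   # fine: only talks to the API
    Workload("feed-cron", zone="3", needs_db=True),  # violates the rule
]
for problem in placement_problems("2", plan):
    print(problem)  # feed-cron talks to the database but runs in zone 3
```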

This latency anomaly in Zone 3 was more than a challenge for us; we took it as an opportunity to learn, adapt, and deepen our knowledge. As we continue to collaborate, test, and refine our approach, our journey into Azure is shaping up to be a very interesting technical exploration that is broadening our toolset for the future.