I built a "production" app using Vibecoding. 97,089 lines generated. Only 25,056 made it to production. Four complete refactors. The AI didn't fail because of bad prompts. It failed because AI forgets, loses context, and creates duplicate logic no matter how well you guide it. Vibecoding isn't about letting AI build for you. It's about knowing your system well enough to filter the noise. Without that knowledge, you're only shipping guesses.

What Vibecoding actually means

People think vibecoding means letting AI build your app while you watch. Reality check: that is not the case at all if you need a working product that delivers the expected result every time.

Vibecoding still means you take control. You prompt with precision. You experiment. You guide. You filter. It's still hands-on work, just with different leverage.

"A fool with a tool is still a fool"

And if you don't know what you're doing? The result is the same as it always was: a mess. Vibecoding without the right knowledge simply goes wrong. The main problem with AI is that it keeps producing code after code after code. It just does what you ask, and it isn't critical about it. So if you don't know what you are doing, you end up contradicting yourself all the time, with functionality that never works together within your app.

AI is the king of fallbacks. Left alone, it would have given me five different fallbacks for every API call that fails. Shouldn't we just make the right way work instead of piling up fallbacks? AI is also lazy: it would rather suppress notices than fix them, especially if the fix doesn't work on the first try.
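To make the fallback point concrete, here is a minimal sketch of the two styles. Everything in it is hypothetical and for illustration only: the `ApiResponse` and `Score` shapes and both function names are assumptions, not code from my project.

```typescript
// Hypothetical shapes, for illustration only.
type ApiResponse = { status: number; body: string };
type Score = { playerId: string; points: number };

// The pattern AI tends to produce: any failure silently becomes
// plausible-looking data, so the caller never learns the call broke.
function parseScoreWithFallback(res: ApiResponse, playerId: string): Score {
  try {
    if (res.status !== 200) throw new Error(`HTTP ${res.status}`);
    return JSON.parse(res.body) as Score;
  } catch {
    return { playerId, points: 0 }; // masks the real problem
  }
}

// The alternative: one code path that fails loudly with context,
// so the real cause gets fixed instead of hidden.
function parseScore(res: ApiResponse, playerId: string): Score {
  if (res.status !== 200) {
    throw new Error(`Score request for ${playerId} failed with HTTP ${res.status}`);
  }
  const data = JSON.parse(res.body) as Partial<Score>;
  if (typeof data.points !== "number") {
    throw new Error(`Score response for ${playerId} has no numeric "points" field`);
  }
  return { playerId, points: data.points };
}
```

With a failing response, the first version returns zero points as if nothing happened, while the second throws an error you can actually trace.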

I built a production app with AI (but not because of AI)

I used Windsurf to build an entire application. No human developers. No code written by me directly. We used Sonnet 4.5 as the model.

The result after a lot of frustration: a full production game with backend logic, login flows, async data writes, mail queues, admin security, monitoring, and cartesian algorithms that update based on user interaction.

The AI wrote all of it. I haven't touched a single line of code. Even for the simplest CSS change, I let AI do it.

But here's what nobody tells you often enough: I had to completely refactor the entire project four times. And not because I prompted poorly. I used KISS principles, DRY standards, PSR guidelines. We wrote down decisions and created implementation documents beforehand. We followed guidelines for the languages we used.

It didn't matter in the end.

AI forgets. It loses context. It starts creating duplicate functions without realizing it already wrote that logic three files ago. The more complex your project gets, the more it drifts. And the more often something isn't done right on the first attempt, the more AI thinks it needs to maintain backwards compatibility, complicating your code even more. This happens no matter how you prompt it the first time and no matter which project rules you set.

Four complete refactors later, I learned: AI doesn't forget context. It never had it ;).

The only reason the final version works is because I knew exactly what I was building. I had the system in my head. I could spot when AI was repeating itself. I knew when to stop it, when to redirect it, when to start over. I checked every file for duplicate fallback data, duplicated logic, and so on. I also used the very useful npm package knip:

https://www.npmjs.com/package/knip
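For readers who haven't used it: knip reports unused files, dependencies, and exports, which is where a lot of abandoned AI output ends up. A minimal configuration sketch could look like the one below. The `entry` and `project` paths are hypothetical placeholders, not the layout of my project, so adjust them to your own.

```typescript
// knip.ts (paths below are assumptions for illustration)
import type { KnipConfig } from 'knip';

const config: KnipConfig = {
  // Where the application starts; knip follows imports from here.
  entry: ['src/index.ts'],
  // Which files belong to the project and should be checked for usage.
  project: ['src/**/*.ts'],
};

export default config;
```

Running `npx knip` in the project root then lists what nothing refers to anymore. It won't find duplicated logic for you, but it does clear away the dead code so the duplicates are easier to spot.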

You can't vibecode something into production without understanding your product. Period. And you need to accept that bug fixing means relying on your AI to fix things without breaking anything else. Good luck with that...

The data tells the rest of our story

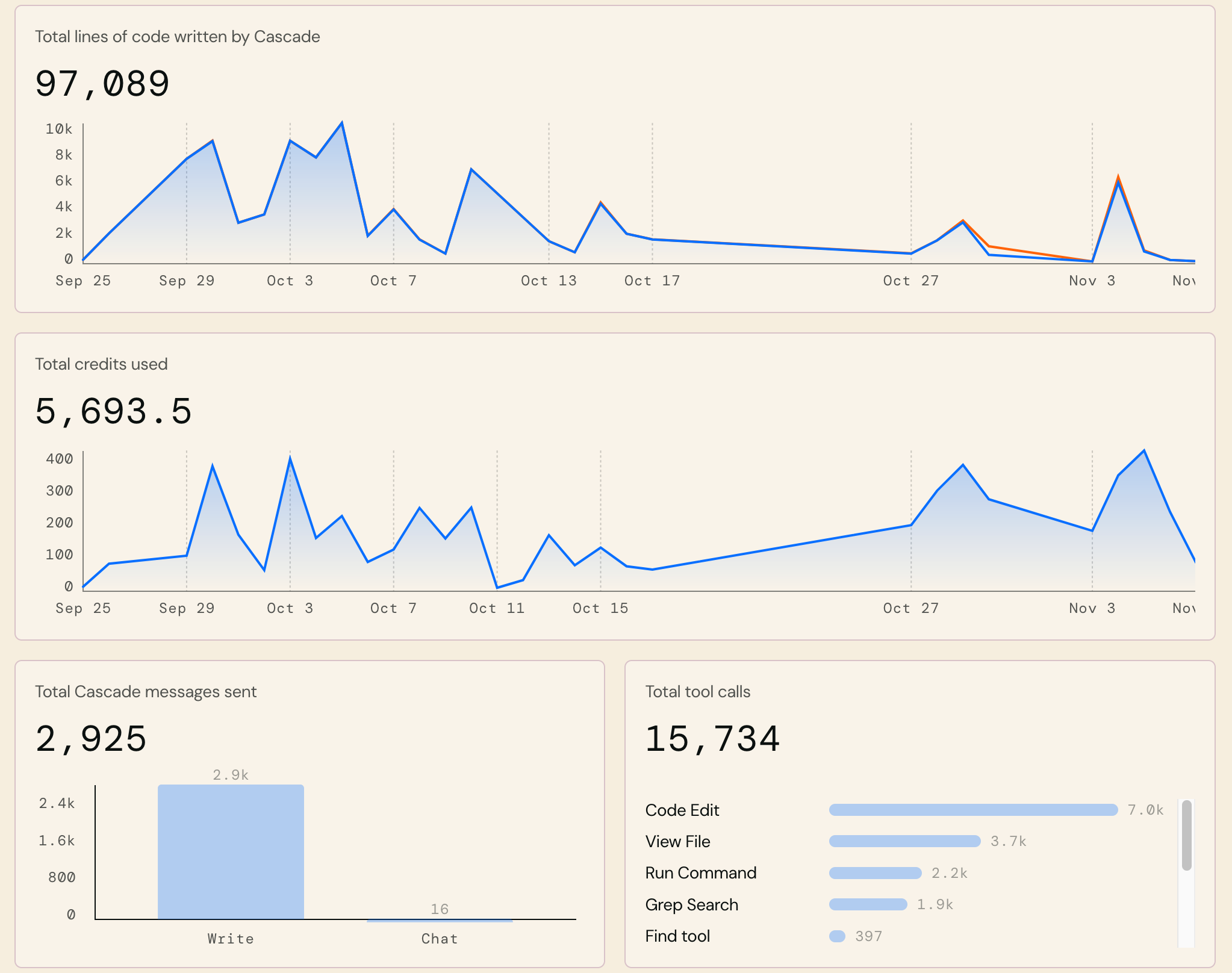

During our Vibecoding, Windsurf produced:

- 97,089 lines of code generated

- 2,925 prompts sent

- 5,693 credits used

- 15,734 tool calls (including 7,000 code edits)

What actually made it into production:

- 25,056 lines of code

Over 70,000 lines got scrapped, replaced or refactored.
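The numbers above can be checked with a few lines of arithmetic. This is only a sketch, and it assumes the production lines can be treated as a subset of the generated lines, which the statistics themselves don't guarantee.

```typescript
// Figures taken from the lists above.
const generatedLines = 97089;
const productionLines = 25056;
const promptsSent = 2925;

// Assumption: production code is counted as a subset of generated code.
const discardedLines = generatedLines - productionLines;
const keptShare = productionLines / generatedLines;
const linesPerPrompt = generatedLines / promptsSent;

console.log(`discarded lines: ${discardedLines}`);                        // 72033
console.log(`kept in production: ${(keptShare * 100).toFixed(1)}%`);      // 25.8%
console.log(`generated lines per prompt: ${linesPerPrompt.toFixed(1)}`);  // 33.2
```

So roughly one in four generated lines survived, and every prompt produced about 33 lines on average.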

Now, you might think this means you just need to "guide AI better" or "prompt more precisely." That's not the point I wanted to make.

The point is: you can't prevent AI spew right now. No matter how well you prompt. No matter how structured your approach is. AI will overproduce. It will generate redundant code. It will lose track of what it already built. It will create noise. AI is built to always produce some form of AI Verbal Diarrhea.

Those 70k+ lines of wasted output? That's not a bug. That's the current state of Vibecoding.

And if you don't know your system well enough to recognize what's garbage and what's not, you'll ship all of it and never be able to debug it, even with AI. Or worse, you'll personally spend weeks debugging a codebase you don't understand.

The cost per 1,000 credits is around 35 dollars. The development of this game cost me 210 dollars. Every company delivering Large Language Models is losing money. The code I produced should easily have cost 210 x 10 = 2,100 dollars at least. I even wonder whether they would have made money at that price.
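As a rough sanity check on those figures, here is the same calculation as a sketch. The price per 1,000 credits is the approximate number quoted above, so the estimate is approximate too, and the factor of ten is my guess, not a known cost.

```typescript
// Figures from the text; the credit price is approximate.
const creditsUsed = 5693;
const dollarsPer1000Credits = 35;
const reportedSpend = 210;
const productionLines = 25056;

// Estimated spend from credits alone.
const estimatedSpend = (creditsUsed / 1000) * dollarsPer1000Credits;

// What each line that reached production cost at the reported spend.
const dollarsPerProductionLine = reportedSpend / productionLines;

// The "times ten" guess from the text.
const guessedRealCost = reportedSpend * 10;

console.log(`estimated spend: about ${Math.round(estimatedSpend)} dollars`);
console.log(`per production line: ${dollarsPerProductionLine.toFixed(4)} dollars`);
console.log(`guessed real cost: ${guessedRealCost} dollars`);
```

The credit-based estimate lands close to the 210 dollars I actually paid, and each production line cost less than one cent.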

Apart from the money, is this really worth our precious electricity? I wonder how feasible this will be once you have to pay the real price.

What people get wrong

AI isn't magic. It's a "smart", fast assistant. But it doesn't understand what matters to your business. It doesn't grasp edge cases, constraints, risks, performance implications, or security vulnerabilities.

That's your job!

In my case, AI helped build something real. But the AI didn't secure the admin panel. It didn't design the mail queue logic. It didn't decide when to debounce async writes. I did, by being critical and asking the right questions, based on what I had learned from building things in the past.

Without that direction, the entire thing would have collapsed.

And I see this pattern everywhere. Teams building AI products without ownership or direction, just to say they've "done something with AI." It's shallow. It's fragile. It doesn't scale.

"You're not building products. You're laundering prompts into deployments"

How often do you hear from higher up: "We need to do something with AI. We are falling behind." Behind what?

The customer service trap

Then there's also the AI customer service hype. Companies claim it's perfect for 24/7 support, faster responses, fewer humans needed.

But if you need a bot to talk to your customers, your real problem is probably somewhere else. AI doesn't fix broken processes. It hides them. Temporarily.

If you think less human contact is a win, you've already lost. If replying to questions takes a long time, first look at why people have so many questions and improve that.

The actual point

Vibecoding is just another tool. That's all it is. A very cool tool in the right hands, but still a tool. It is mainly good for prototyping, but with a good dose of knowledge you can maybe produce standalone apps that actually work, that you can at least test yourself, and whose outcome is transparently traceable to the code that was written.

It doesn't replace thinking. It should amplify it, and it shouldn't make you think less.

Used right, it can be powerful. But you still need to know what you want to build. You need to own the structure. You need to filter the noise. You need to recognize AI spew when you see it. You need to lead the process.

Otherwise, you're not building a prototype. You're just generating guesses.

The future isn't AI-first. The companies that matter stay human-first. They understand the problem before picking the tool. And they keep asking the only question that counts before reaching for AI to help them:

What are we actually trying to solve?